Work on brain-computer interfaces (BCI) was perhaps kickstarted by Hans Berger, who discovered the electrical activity of the human brain in 1924 and went on to develop the field of electroencephalography (EEG).

The field, previously the exclusive reserve of scientists and researchers, has seen a boost in recent years, following advancements in artificial intelligence (AI) and perhaps also a curiosity to understand how human thought, cognition and consciousness emerge.

In a bid to understand and contribute to the fundamentals of human intelligence, with a view to creating machines that can approximate human intelligence, thought and decision-making, Meta announced that it has made progress towards a non-invasive AI system that decodes images from human brain activity. *

The announcement reads in part: “This AI system can be deployed in real-time to reconstruct, from brain activity, the images perceived and processed by the brain at each instant”. It further states: “This opens up an important avenue to help the scientific community understand how images are represented in the brain, and then used as foundations of human intelligence. (In the) longer term, it may also provide a stepping stone toward non-invasive brain-computer interfaces in a clinical setting that could help people who, after suffering a brain lesion, have lost their ability to speak”**. That may well be only the beginning, as I am certain there will be applications for this technology outside clinical studies and general research.

Neuralink Corp., founded by Elon Musk and a team of seven scientists and engineers and launched in 2016, is a US-based neurotechnology company that is developing implantable brain–computer interfaces. It is reported to have received approval from the Food and Drug Administration (the United States regulator for the food and pharmaceutical industry) to conduct its first in-human clinical study, following a period of non-human tests.***

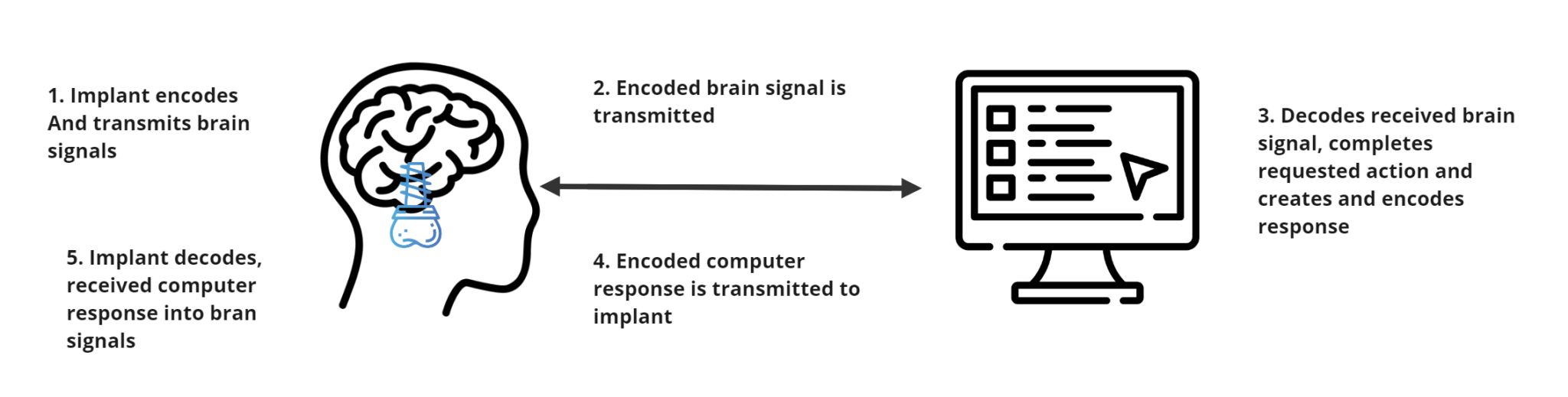

Whilst Meta’s approach involves developing non-invasive methods for scientists (and eventually product designers) to interact with the human brain, Neuralink’s approach, which is being tested, is invasive: it requires placing an implant that will serve as an encoder and transmitter of signals from the brain’s activity to an external receiver plugged into a highly specialised computer. The implant, in turn, might be capable of receiving transmissions from that computer, decoding them and converting them into signals communicated directly to the brain.

Advances in brain-computer interfaces will likely bring developments that:

- remove the need for existing, somewhat cumbersome interfaces for interacting with data and communicating in general

- empower people living with impairments to participate in society without the encumbrances of their physical impairments, given the direct connection between their brains and the communication and action tools and devices that previously required physical access

- advance a general understanding of, and possibly extend, the functions of the human brain

Whilst the full implications of these advancements are being debated, policies to guide their use are yet to be crafted, either because, as is often the case, innovation leapfrogs policy and governance, or because the general implications of these advancements are yet to be fully understood or appreciated. One wonders whether these advancements will lead to a further divide amongst the world’s population, similar to the digital divide occasioned by the evolution of digital technology.

List of references

* Meta Makes Progress Towards AI System That Decodes Images From The Brain Activity: https://www.neatprompts.com/p/meta-makes-ai-system-decodes-images-brain-activity?utm_source=www.neatprompts.com&utm_medium=newsletter&utm_campaign=meta-s-mind-reading-ai

** Towards a Real-Time Decoding of Images from Brain Activity: https://ai.meta.com/blog/brain-ai-image-decoding-meg-magnetoencephalography/

*** Elon Musk’s brain implant company Neuralink announces FDA approval of in-human clinical study: https://www.cnbc.com/2023/05/25/elon-musks-neuralink-gets-fda-approval-for-in-human-study.html